Implementing a Rate Limiter

Different approaches to rate limiting

In continuation of the previous article on basics of throttling, in this article we would look at some approaches for implementing a rate limiter.

A rate limiter has to ensure certain operations are limited after a threshold is reached. As an example, cannot create more than N accounts from a single IP, cannot make more than N transactions with a credit card etc.

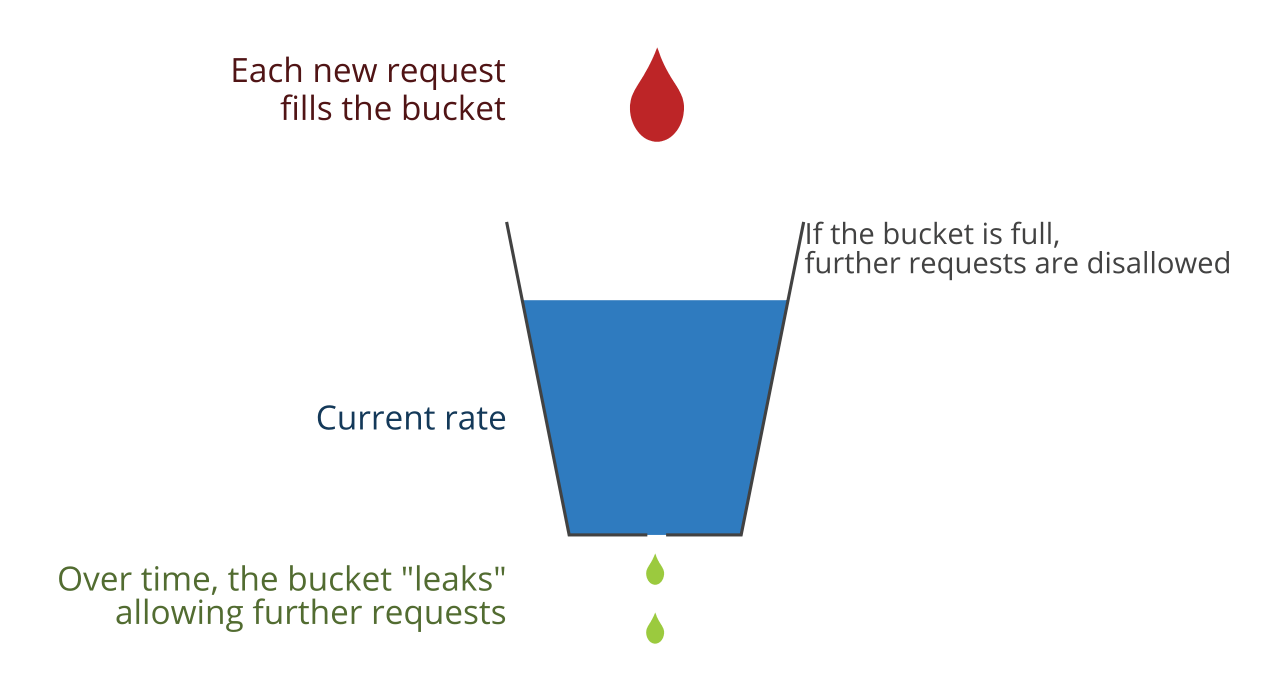

The industry standard for implementing a rate limiter is the token bucket algorithm or leaky token bucket algorithm.

Any rate limiter would need to cater to the following requirements:

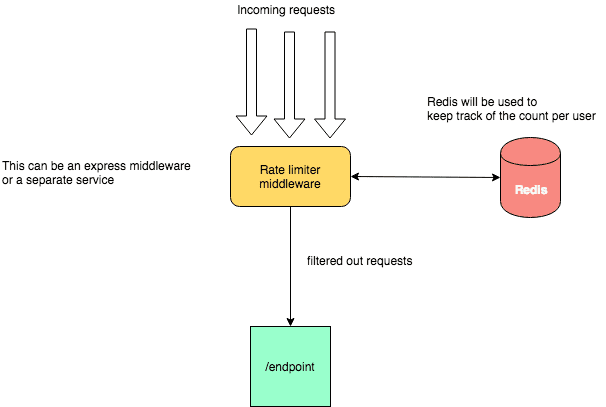

Since the web server that are handling the requests are state less and are behind a load balancer, it is important to store the data about the number of requests externally so that all instances can share the same.

Rate limiter should not slow down the request processing time considerably.

Efficiently eject stale data

Accurately limit excessive use of the API

Does not increase the memory foot print.

Its feasible to use any inmemory cache like Redis to support the first 3 requirements to store the tracking data about the number of requests per caller and its more efficient than storing the data in a database.

Token Bucket Approach

A simple implementation would be to keep a hash of the unique user, last request timestamp and the token count. Token count is used to track how many requests the caller is allowed to make. The hash of the unique user would be kept in memory.

Key - User Id - String

Value - {Tokens : 10, Timestamp : 132980 }

Whenever a request comes, the rate limiter has to fetch the hash value based on the key (user id). Based on the number of tokens available allow or block the API. When the token count drops to zero, the rate limiter blocks the user as it exceeded the limit.

The above is an example of Token bucket algorithm. In the above case the tokens are added every few seconds and so if the rate of requests is more than the available tokens then the call would be blocked.

It is very important that to ensure atomicity while implementing since there can be multiple parallel requests and the update has to be atomic. If not the rate limiter can be lenient and allow more requests than allowed.

If there was one available token for a user and that user issued multiple requests. If two different processes served each of these requests and concurrently read the available token count before either of them updated it, each process would think the user had a single token left and that they had not hit the rate limit.

Fixed Count Approach

In this approach there is a fixed counter in a given time window. In this approach record the number of requests from a user happening in the rate limit time window. In this approach maintain a hash of the user and the timestamp and the count as the value.

When a request comes find the key in the hash table for the given user, time stamp combination and would compare the count of the requests to decide whether to accept or reject the request.

Sliding Window Log

A sliding window log records the timestamp of every request in a sorted set. Every request of a given user is recorded in a single sorted set. The sorted size of the set would be equal to the number of requests in the given sliding window of time. To optimize we can remove all the timestamps that are outside the given time window. This approach is not that efficient in terms of memory as we are recording each timestamp in the given time window.

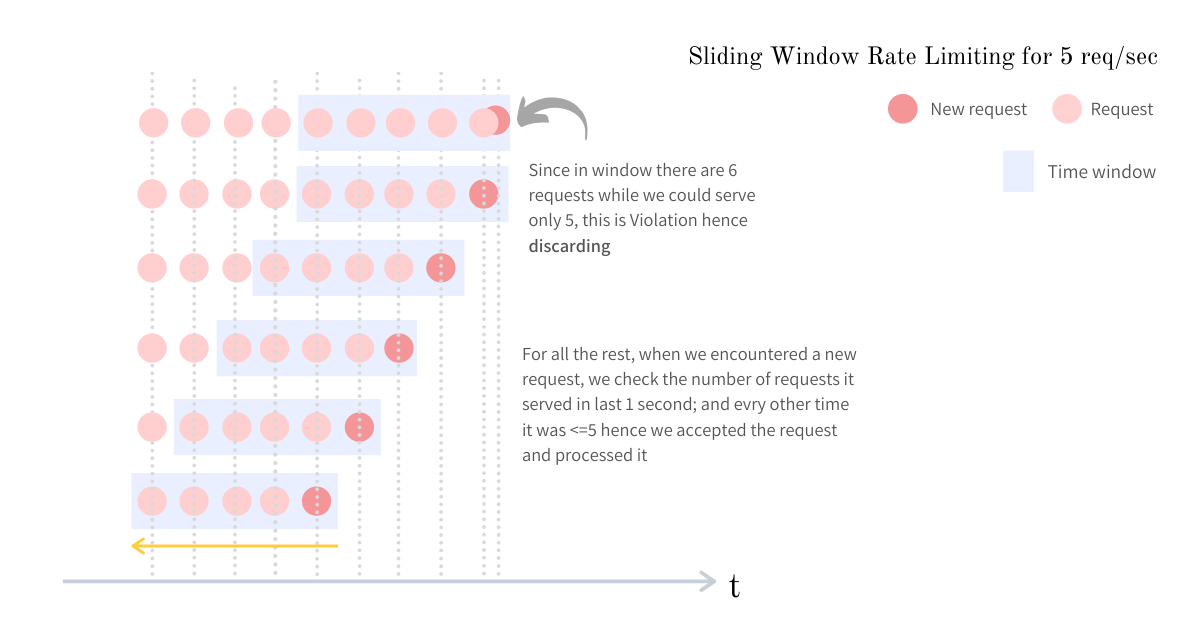

Sliding Window

The Sliding window is an hybrid approach that combines the fixed count approach and the sliding window log. Like the fixed window algorithm, track the counter for each fixed window. Next, account for a weighted value of the previous window’s request rate based on the current timestamp to smooth out bursts of traffic.

As you implement the rate limiter, it is important to inform the callers that the rate limiting has blocked the call. Traditionally, web applications respond to requests from users who surpass the rate limit with a HTTP response code of 429.